How Long Does A Process Server Have To Wait Between Service Attempts?

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

The scheduling activity is carried out by a process called a scheduler. Schedulers are often designed so as to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or to achieve a target quality-of-service.

Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU).

Goals

A scheduler may aim at one or more goals, for example:

- maximizing throughput (the total amount of work completed per time unit);

- minimizing wait time (time from work becoming ready until the first point it begins execution);

- minimizing latency or response time (time from work becoming ready until it is finished in case of batch activity,[1][2][3] or until the system responds and hands the first output to the user in case of interactive activity);[4]

- maximizing fairness (equal CPU time to each process, or more generally appropriate times according to the priority and workload of each process).

In practice, these goals often conflict (e.g. throughput versus latency), thus a scheduler will implement a suitable compromise. Preference is measured by any one of the concerns mentioned above, depending upon the user's needs and objectives.

In real-time environments, such as embedded systems for automatic control in industry (for example robotics), the scheduler must also ensure that processes can meet deadlines; this is crucial for keeping the system stable. Scheduled tasks can also be distributed to remote devices across a network and managed through an administrative back end.

Types of operating system schedulers

The scheduler is an operating system module that selects the next jobs to be admitted into the system and the next process to run. Operating systems may feature up to three distinct scheduler types: a long-term scheduler (also known as an admission scheduler or high-level scheduler), a mid-term or medium-term scheduler, and a short-term scheduler. The names suggest the relative frequency with which their functions are performed.

Process scheduler

The process scheduler is a part of the operating system that decides which process runs at a certain point in time. It usually has the ability to pause a running process, move it to the back of the running queue and start a new process; such a scheduler is known as a preemptive scheduler, otherwise it is a cooperative scheduler.[5]

We distinguish between "long-term scheduling", "medium-term scheduling", and "short-term scheduling" based on how often decisions must be made.[6]

Long-term scheduling

The long-term scheduler, or admission scheduler, decides which jobs or processes are to be admitted to the ready queue (in main memory); that is, when an attempt is made to execute a program, its admission to the set of currently executing processes is either authorized or delayed by the long-term scheduler. Thus, this scheduler dictates what processes are to run on a system, and the degree of concurrency to be supported at any one time – whether many or few processes are to be executed concurrently, and how the split between I/O-intensive and CPU-intensive processes is to be handled. The long-term scheduler is responsible for controlling the degree of multiprogramming.

In general, most processes can be described as either I/O-bound or CPU-bound. An I/O-bound process is one that spends more of its time doing I/O than it spends doing computations. A CPU-bound process, in contrast, generates I/O requests infrequently, using more of its time doing computations. It is important that a long-term scheduler selects a good process mix of I/O-bound and CPU-bound processes. If all processes are I/O-bound, the ready queue will almost always be empty, and the short-term scheduler will have little to do. On the other hand, if all processes are CPU-bound, the I/O waiting queue will almost always be empty, devices will go unused, and again the system will be unbalanced. The system with the best performance will thus have a combination of CPU-bound and I/O-bound processes. In modern operating systems, this is used to make sure that real-time processes get enough CPU time to finish their tasks.[7]

Long-term scheduling is also important in large-scale systems such as batch processing systems, computer clusters, supercomputers, and render farms. For example, in concurrent systems, coscheduling of interacting processes is often required to prevent them from blocking due to waiting on each other. In these cases, special-purpose job scheduler software is typically used to assist these functions, in addition to any underlying admission scheduling support in the operating system.

Some operating systems only allow new tasks to be added if it is sure all real-time deadlines can still be met. The specific heuristic algorithm used by an operating system to accept or reject new tasks is the admission control mechanism.[8]

Medium-term scheduling

The medium-term scheduler temporarily removes processes from main memory and places them in secondary memory (such as a hard disk drive) or vice versa, which is commonly referred to as "swapping out" or "swapping in" (also incorrectly as "paging out" or "paging in"). The medium-term scheduler may decide to swap out a process which has not been active for some time, or a process which has a low priority, or a process which is page faulting frequently, or a process which is taking up a large amount of memory in order to free up main memory for other processes, swapping the process back in later when more memory is available, or when the process has been unblocked and is no longer waiting for a resource. [Stallings, 396] [Stallings, 370]

In many systems today (those that support mapping virtual address space to secondary storage other than the swap file), the medium-term scheduler may actually perform the role of the long-term scheduler, by treating binaries as "swapped out processes" upon their execution. In this way, when a segment of the binary is required it can be swapped in on demand, or "lazy loaded",[Stallings, 394] also called demand paging.

Short-term scheduling

The short-term scheduler (also known as the CPU scheduler) decides which of the ready, in-memory processes is to be executed (allocated a CPU) after a clock interrupt, an I/O interrupt, an operating system call or another form of signal. Thus the short-term scheduler makes scheduling decisions much more frequently than the long-term or mid-term schedulers – a scheduling decision will at a minimum have to be made after every time slice, and these are very short. This scheduler can be preemptive, implying that it is capable of forcibly removing processes from a CPU when it decides to allocate that CPU to another process, or non-preemptive (also known as "voluntary" or "co-operative"), in which case the scheduler is unable to "force" processes off the CPU.

A preemptive scheduler relies upon a programmable interval timer which invokes an interrupt handler that runs in kernel mode and implements the scheduling function.

Dispatcher

Another component that is involved in the CPU-scheduling function is the dispatcher, which is the module that gives control of the CPU to the process selected by the short-term scheduler. It receives control in kernel mode as the result of an interrupt or system call. The functions of a dispatcher involve the following:

- Context switches, in which the dispatcher saves the state (also known as context) of the process or thread that was previously running; the dispatcher then loads the initial or previously saved state of the new process.

- Switching to user mode.

- Jumping to the proper location in the user program to restart that program indicated by its new state.

The dispatcher should be as fast as possible, since it is invoked during every process switch. During the context switches, the processor is virtually idle for a fraction of time, thus unnecessary context switches should be avoided. The time it takes for the dispatcher to stop one process and start another is known as the dispatch latency.[7]: 155

Scheduling disciplines

A scheduling discipline (also called scheduling policy or scheduling algorithm) is an algorithm used for distributing resources among parties which simultaneously and asynchronously request them. Scheduling disciplines are used in routers (to handle packet traffic) as well as in operating systems (to share CPU time among both threads and processes), disk drives (I/O scheduling), printers (print spooler), most embedded systems, etc.

The main purposes of scheduling algorithms are to minimize resource starvation and to ensure fairness among the parties utilizing the resources. Scheduling deals with the problem of deciding which of the outstanding requests is to be allocated resources. There are many different scheduling algorithms. In this section, we introduce several of them.

In packet-switched computer networks and other statistical multiplexing, the notion of a scheduling algorithm is used as an alternative to first-come first-served queuing of data packets.

The simplest best-effort scheduling algorithms are round-robin, fair queuing (a max-min fair scheduling algorithm), proportional-fair scheduling and maximum throughput. If differentiated or guaranteed quality of service is offered, as opposed to best-effort communication, weighted fair queuing may be utilized.

In advanced packet radio wireless networks such as the HSDPA (High-Speed Downlink Packet Access) 3.5G cellular system, channel-dependent scheduling may be used to take advantage of channel state information. If the channel conditions are favourable, the throughput and system spectral efficiency may be increased. In even more advanced systems such as LTE, the scheduling is combined with channel-dependent packet-by-packet dynamic channel allocation, or with assigning OFDMA multi-carriers or other frequency-domain equalization components to the users that can best utilize them.[9]

First come, first served [edit]

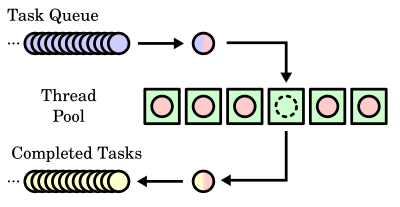

A sample thread pool (green boxes) with a queue (FIFO) of waiting tasks (blueish) and a queue of completed tasks (yellow)

First in, first out (FIFO), also known as first come up, get-go served (FCFS), is the simplest scheduling algorithm. FIFO simply queues processes in the society that they arrive in the set up queue. This is usually used for a task queue , for example as illustrated in this section.

- Since context switches but occur upon process termination, and no reorganization of the process queue is required, scheduling overhead is minimal.

- Throughput tin can exist depression, because long processes can be belongings the CPU, causing the short processes to wait for a long fourth dimension (known equally the convoy outcome).

- No starvation, because each process gets chance to be executed later a definite fourth dimension.

- Turnaround fourth dimension, waiting time and response time depend on the order of their arrival and can exist high for the same reasons to a higher place.

- No prioritization occurs, thus this organisation has trouble coming together procedure deadlines.

- The lack of prioritization means that every bit long as every process somewhen completes, there is no starvation. In an surround where some processes might non complete, there tin can exist starvation.

- Information technology is based on queuing.
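The convoy effect described above can be made concrete with a minimal sketch. It assumes all processes arrive at time 0 in list order; the function names and the burst lengths are illustrative, not taken from any particular system.

```python
def fcfs_waiting_times(burst_times):
    """Return the waiting time of each process under FCFS.

    Assumes every process arrives at time 0, queued in list order.
    """
    waiting = []
    clock = 0
    for burst in burst_times:
        waiting.append(clock)  # a process waits for everything queued before it
        clock += burst
    return waiting


def average(values):
    return sum(values) / len(values)


# Hypothetical burst lengths: one long job and two short ones.
long_first = fcfs_waiting_times([24, 3, 3])   # long job at the head of the queue
short_first = fcfs_waiting_times([3, 3, 24])  # same jobs, long job last

print(average(long_first), average(short_first))
```

With the long job first the short jobs queue behind it and the average wait is 17 time units; with the long job last the average drops to 3, although the total work is identical.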

Priority scheduling

Earliest deadline first (EDF) or least time to go is a dynamic scheduling algorithm used in real-time operating systems to place processes in a priority queue. Whenever a scheduling event occurs (a task finishes, a new task is released, etc.), the queue will be searched for the process closest to its deadline, which will be the next to be scheduled for execution.
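As a rough illustration of the selection step, the sketch below keeps released tasks in a binary heap keyed by deadline, so the task closest to its deadline is always at the front. Using a heap rather than a linear search is a choice made here for brevity; the class name, task names and deadlines are all hypothetical.

```python
import heapq


class EDFQueue:
    """Priority queue of ready tasks keyed by absolute deadline."""

    def __init__(self):
        self._heap = []
        self._counter = 0  # tie-breaker so equal deadlines stay in release order

    def release(self, name, deadline):
        heapq.heappush(self._heap, (deadline, self._counter, name))
        self._counter += 1

    def pick_next(self):
        """Remove and return the ready task closest to its deadline, or None."""
        if not self._heap:
            return None
        _deadline, _order, name = heapq.heappop(self._heap)
        return name


queue = EDFQueue()
queue.release("log_flush", deadline=50)
queue.release("motor_control", deadline=10)
queue.release("telemetry", deadline=30)
order = [queue.pick_next() for _ in range(3)]
print(order)
```

A real EDF scheduler would call `pick_next` at every scheduling event and would also have to handle preemption of the running task, which this sketch leaves out.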

Shortest remaining time first

Similar to shortest job first (SJF). With this strategy the scheduler arranges processes with the least estimated processing time remaining to be next in the queue. This requires advance knowledge or estimations about the time required for a process to complete.

- If a shorter process arrives during another process's execution, the currently running process is interrupted (known as preemption), dividing that process into two separate computing blocks. This creates excess overhead through additional context switching. The scheduler must also place each incoming process into a specific place in the queue, creating additional overhead.

- This algorithm is designed for maximum throughput in most scenarios.

- Waiting time and response time increase as the process's computational requirements increase. Since turnaround time is based on waiting time plus processing time, longer processes are significantly affected by this. Overall waiting time is smaller than FIFO, however, since no process has to wait for the termination of the longest process.

- No particular attention is given to deadlines; the programmer can only attempt to make processes with deadlines as short as possible.

- Starvation is possible, especially in a busy system with many small processes being run.

- To use this policy we should have at least two processes of different priority.
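The preemption behaviour in the list above can be sketched with a small simulation that re-evaluates the choice at every time unit. It assumes burst times are known exactly in advance (which, as noted, a real system can only estimate); the job names and numbers are made up for illustration.

```python
def srtf(processes):
    """Simulate shortest remaining time first in one-unit time steps.

    `processes` maps a name to (arrival_time, burst_time).
    Returns a dict mapping each name to its completion time.
    """
    remaining = {name: burst for name, (_, burst) in processes.items()}
    completion = {}
    clock = 0
    while remaining:
        ready = [n for n in remaining if processes[n][0] <= clock]
        if not ready:
            clock += 1  # CPU idles until the next arrival
            continue
        # Preemption is implicit: the choice is re-made every tick, so a
        # newly arrived shorter job displaces the one that was running.
        current = min(ready, key=lambda n: (remaining[n], processes[n][0]))
        remaining[current] -= 1
        clock += 1
        if remaining[current] == 0:
            del remaining[current]
            completion[current] = clock
    return completion


# Hypothetical workload: name -> (arrival time, burst time)
jobs = {"A": (0, 8), "B": (1, 4), "C": (2, 9), "D": (3, 5)}
done = srtf(jobs)
print(done)
```

Here job A starts first but is preempted when the shorter job B arrives at time 1; the longest job, C, finishes last, which is the mechanism behind the starvation risk mentioned above.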

Fixed priority pre-emptive scheduling

The operating system assigns a fixed priority rank to every process, and the scheduler arranges the processes in the ready queue in order of their priority. Lower-priority processes get interrupted by incoming higher-priority processes.

- Overhead is not minimal, nor is it significant.

- FPPS has no particular advantage in terms of throughput over FIFO scheduling.

- If the number of rankings is limited, it can be characterized as a collection of FIFO queues, one for each priority ranking. Processes in lower-priority queues are selected only when all of the higher-priority queues are empty.

- Waiting time and response time depend on the priority of the process. Higher-priority processes have smaller waiting and response times.

- Deadlines can be met by giving processes with deadlines a higher priority.

- Starvation of lower-priority processes is possible with large numbers of high-priority processes queuing for CPU time.
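The "collection of FIFO queues" view mentioned above might look like the following sketch. It covers only the selection rule, not the interruption of a running process; the class name, the convention that level 0 is the highest priority, and the task names are assumptions made for this example.

```python
from collections import deque


class FixedPriorityQueues:
    """One FIFO queue per priority level; level 0 is the highest priority."""

    def __init__(self, levels):
        self._queues = [deque() for _ in range(levels)]

    def add(self, name, priority):
        self._queues[priority].append(name)

    def pick_next(self):
        # Scan from highest to lowest priority; a lower queue is only
        # reached when every higher-priority queue is empty.
        for queue in self._queues:
            if queue:
                return queue.popleft()
        return None


ready = FixedPriorityQueues(levels=3)
ready.add("backup", priority=2)
ready.add("ui_event", priority=0)
ready.add("compile", priority=1)
ready.add("keypress", priority=0)
order = [ready.pick_next() for _ in range(4)]
print(order)
```

If new level-0 work kept arriving, `backup` would never be picked, which is the starvation case in the last bullet.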

Round-robin scheduling

The scheduler assigns a fixed time unit per process, and cycles through them. If a process completes within its time slice, it terminates; otherwise it is rescheduled after all other processes have been given a chance to run.

- RR scheduling involves extensive overhead, especially with a small time unit.

- Balanced throughput between FCFS/FIFO and SJF/SRTF: shorter jobs are completed faster than in FIFO and longer processes are completed faster than in SJF.

- Good average response time; waiting time is dependent on the number of processes, and not on average process length.

- Because of high waiting times, deadlines are rarely met in a pure RR system.

- Starvation can never occur, since no priority is given. Order of time unit allocation is based upon process arrival time, similar to FIFO.

- If the time slice is large it becomes FCFS/FIFO, or if it is short then it becomes SJF/SRTF.
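A minimal round-robin simulation, assuming all processes arrive at time 0 and ignoring context-switch cost, shows how the time slice changes completion order. The function name and burst lengths are illustrative only.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return completion times under round-robin; all processes arrive at time 0."""
    queue = deque(burst_times.items())
    completion = {}
    clock = 0
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # unfinished: back of the queue
        else:
            completion[name] = clock
    return completion


# Hypothetical workload: one long job and two short ones.
jobs = {"P1": 24, "P2": 3, "P3": 3}
small_slice = round_robin(jobs, quantum=4)
large_slice = round_robin(jobs, quantum=100)
print(small_slice, large_slice)
```

With a quantum of 4 the short jobs finish at times 7 and 10 instead of waiting behind P1; with a quantum larger than every burst the result is the same as FCFS.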

Multilevel queue scheduling

This is used for situations in which processes are easily divided into different groups. For example, a common division is made between foreground (interactive) processes and background (batch) processes. These two types of processes have different response-time requirements and so may have different scheduling needs. It is very useful for shared memory problems.

Work-conserving schedulers

A work-conserving scheduler is a scheduler that always tries to keep the scheduled resources busy, if there are submitted jobs ready to be scheduled. In contrast, a non-work conserving scheduler is a scheduler that, in some cases, may leave the scheduled resources idle despite the presence of jobs ready to be scheduled.

Scheduling optimization problems

There are several scheduling problems in which the goal is to decide which job goes to which station at what time, such that the total makespan is minimized:

- Job shop scheduling – there are n jobs and m identical stations. Each job should be executed on a single machine. This is usually regarded as an online problem.

- Open-shop scheduling – there are n jobs and m different stations. Each job should spend some time at each station, in a free order.

- Flow shop scheduling – there are n jobs and m different stations. Each job should spend some time at each station, in a pre-determined order.
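To make "makespan" concrete for the first variant (each job runs on one of m identical stations), the sketch below applies a simple greedy rule: every job, in arrival order, goes to the station that is currently least loaded. This is one well-known heuristic chosen for illustration, not an exact method, and the job lengths are hypothetical.

```python
import heapq


def greedy_makespan(job_lengths, stations):
    """Assign each job, in the given order, to the least-loaded station.

    Returns the resulting makespan (finish time of the busiest station).
    This is a heuristic and is not guaranteed to be optimal.
    """
    loads = [0] * stations
    heapq.heapify(loads)
    for length in job_lengths:
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + length)
    return max(loads)


print(greedy_makespan([3, 3, 2, 2, 2], stations=2))
```

For these five jobs the greedy rule yields a makespan of 7, whereas placing the two jobs of length 3 on one station and the three jobs of length 2 on the other gives 6, which shows why these are treated as optimization problems.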

Manual scheduling

A very common method in embedded systems is to schedule jobs manually. This can for example be done in a time-multiplexed fashion. Sometimes the kernel is divided in three or more parts: manual scheduling, preemptive and interrupt level. Exact methods for scheduling jobs are often proprietary.

- No resource starvation problems

- Very high predictability; allows implementation of hard real-time systems

- Almost no overhead

- May not be optimal for all applications

- Effectiveness is completely dependent on the implementation

Choosing a scheduling algorithm

When designing an operating system, a programmer must consider which scheduling algorithm will perform best for the use the system is going to see. There is no universal "best" scheduling algorithm, and many operating systems use extended versions or combinations of the scheduling algorithms above.

For example, Windows NT/XP/Vista uses a multilevel feedback queue, a combination of fixed-priority preemptive scheduling, round-robin, and first in, first out algorithms. In this system, threads can dynamically increase or decrease in priority depending on whether they have been serviced already, or have been waiting extensively. Every priority level is represented by its own queue, with round-robin scheduling among the high-priority threads and FIFO among the lower-priority ones. In this sense, response time is short for most threads, and short but critical system threads get completed very quickly. Since threads can only use one time unit of the round-robin in the highest-priority queue, starvation can be a problem for longer high-priority threads.
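The general idea of a multilevel feedback queue can be sketched as follows. This is a deliberately simplified model and not the Windows implementation: all tasks arrive at time 0, a task that uses its whole slice is demoted one level, and there is no priority boost for tasks that have waited a long time. The function name, task names and quanta are assumptions.

```python
from collections import deque


def mlfq(burst_times, quanta):
    """Run tasks through a multilevel feedback queue; return the finish order.

    quanta[i] is the time slice of level i (level 0 = highest priority).
    A task that uses its whole slice without finishing is demoted one level.
    """
    levels = [deque() for _ in quanta]
    for name, burst in burst_times.items():
        levels[0].append((name, burst))  # every task starts at the top level
    finished = []
    while any(levels):
        # Always serve the highest-priority non-empty level.
        level = next(i for i, q in enumerate(levels) if q)
        name, remaining = levels[level].popleft()
        remaining -= min(quanta[level], remaining)
        if remaining == 0:
            finished.append(name)
        else:
            lower = min(level + 1, len(levels) - 1)
            levels[lower].append((name, remaining))
    return finished


order = mlfq({"editor": 2, "compiler": 12, "shell": 1}, quanta=[2, 4, 8])
print(order)
```

The short interactive tasks finish at the top level, while the long-running `compiler` task sinks to lower levels with longer slices and finishes last.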

Operating system process scheduler implementations

The algorithm used may be as simple as round-robin, in which each process is given equal time (for instance 1 ms, usually between 1 ms and 100 ms) in a cycling list. So, process A executes for 1 ms, then process B, then process C, then back to process A.

More advanced algorithms take into account process priority, or the importance of the process. This allows some processes to use more time than other processes. The kernel always uses whatever resources it needs to ensure proper functioning of the system, and so can be said to have infinite priority. In SMP systems, processor affinity is considered to increase overall system performance, even if it may cause a process itself to run more slowly. This generally improves performance by reducing cache thrashing.

OS/360 and successors

IBM OS/360 was available with three different schedulers. The differences were such that the variants were often considered three different operating systems:

- The Single Sequential Scheduler option, also known as the Primary Control Program (PCP), provided sequential execution of a single stream of jobs.

- The Multiple Sequential Scheduler option, known as Multiprogramming with a Fixed Number of Tasks (MFT), provided execution of multiple concurrent jobs. Execution was governed by a priority which had a default for each stream or could be requested separately for each job. MFT version II added subtasks (threads), which executed at a priority based on that of the parent job. Each job stream defined the maximum amount of memory which could be used by any job in that stream.

- The Multiple Priority Schedulers option, or Multiprogramming with a Variable Number of Tasks (MVT), featured subtasks from the start; each job requested the priority and memory it required before execution.

Later virtual storage versions of MVS added a Workload Manager feature to the scheduler, which schedules processor resources according to an elaborate scheme defined by the installation.

Windows

Very early MS-DOS and Microsoft Windows systems were non-multitasking, and as such did not feature a scheduler. Windows 3.1x used a non-preemptive scheduler, meaning that it did not interrupt programs. It relied on the program to end or tell the OS that it didn't need the processor so that it could move on to another process. This is usually called cooperative multitasking. Windows 95 introduced a rudimentary preemptive scheduler; however, for legacy support it opted to let 16-bit applications run without preemption.[10]

Windows NT-based operating systems use a multilevel feedback queue. 32 priority levels are defined, 0 through to 31, with priorities 0 through 15 being "normal" priorities and priorities 16 through 31 being soft real-time priorities, requiring privileges to assign. 0 is reserved for the operating system. User interfaces and APIs work with priority classes for the process and the threads in the process, which are then combined by the system into the absolute priority level.

The kernel may change the priority level of a thread depending on its I/O and CPU usage and whether it is interactive (i.e. accepts and responds to input from humans), raising the priority of interactive and I/O-bound processes and lowering that of CPU-bound processes, to increase the responsiveness of interactive applications.[11] The scheduler was modified in Windows Vista to use the cycle counter register of modern processors to keep track of exactly how many CPU cycles a thread has executed, rather than just using an interval-timer interrupt routine.[12] Vista also uses a priority scheduler for the I/O queue so that disk defragmenters and other such programs do not interfere with foreground operations.[13]

Classic Mac OS and macOS

Mac OS 9 uses cooperative scheduling for threads, where one process controls multiple cooperative threads, and also provides preemptive scheduling for multiprocessing tasks. The kernel schedules multiprocessing tasks using a preemptive scheduling algorithm. All Process Manager processes run within a special multiprocessing task, called the "blue task". Those processes are scheduled cooperatively, using a round-robin scheduling algorithm; a process yields control of the processor to another process by explicitly calling a blocking function such as WaitNextEvent. Each process has its own copy of the Thread Manager that schedules that process's threads cooperatively; a thread yields control of the processor to another thread by calling YieldToAnyThread or YieldToThread.[14]

macOS uses a multilevel feedback queue, with four priority bands for threads – normal, system high priority, kernel mode only, and real-time.[15] Threads are scheduled preemptively; macOS also supports cooperatively scheduled threads in its implementation of the Thread Manager in Carbon.[14]

AIX

In AIX Version 4 there are three possible values for thread scheduling policy:

- First In, First Out: Once a thread with this policy is scheduled, it runs to completion unless it is blocked, it voluntarily yields control of the CPU, or a higher-priority thread becomes dispatchable. Only fixed-priority threads can have a FIFO scheduling policy.

- Round Robin: This is similar to the AIX Version 3 scheduler round-robin scheme based on 10 ms time slices. When an RR thread has control at the end of the time slice, it moves to the tail of the queue of dispatchable threads of its priority. Only fixed-priority threads can have a Round Robin scheduling policy.

- OTHER: This policy is defined by POSIX1003.4a as implementation-defined. In AIX Version 4, this policy is defined to be equivalent to RR, except that it applies to threads with non-fixed priority. The recalculation of the running thread's priority value at each clock interrupt means that a thread may lose control because its priority value has risen above that of another dispatchable thread. This is the AIX Version 3 behavior.

Threads are primarily of interest for applications that currently consist of several asynchronous processes. These applications might impose a lighter load on the system if converted to a multithreaded structure.

AIX 5 implements the following scheduling policies: FIFO, round robin, and a fair round robin. The FIFO policy has three different implementations: FIFO, FIFO2, and FIFO3. The round robin policy is named SCHED_RR in AIX, and the fair round robin is called SCHED_OTHER.[16]

Linux

A highly simplified structure of the Linux kernel, which includes process schedulers, I/O schedulers, and packet schedulers

Linux 2.4

In Linux 2.4, an O(n) scheduler with a multilevel feedback queue with priority levels ranging from 0 to 140 was used; 0–99 are reserved for real-time tasks and 100–140 are considered nice task levels. For real-time tasks, the time quantum for switching processes was approximately 200 ms, and for nice tasks approximately 10 ms.[citation needed] The scheduler ran through the run queue of all ready processes, letting the highest priority processes go first and run through their time slices, after which they were placed in an expired queue. When the active queue was empty, the expired queue became the active queue and vice versa.

However, some enterprise Linux distributions such as SUSE Linux Enterprise Server replaced this scheduler with a backport of the O(1) scheduler (which was maintained by Alan Cox in his Linux 2.4-ac Kernel series) to the Linux 2.4 kernel used by the distribution.

Linux 2.6.0 to Linux 2.6.22

In versions 2.6.0 to 2.6.22, the kernel used an O(1) scheduler developed by Ingo Molnar and many other kernel developers during the Linux 2.5 development. For many kernels in this time frame, Con Kolivas developed patch sets which improved interactivity with this scheduler or even replaced it with his own schedulers.

Since Linux 2.6.23

Con Kolivas' work, most significantly his implementation of "fair scheduling" named "Rotating Staircase Deadline", inspired Ingo Molnár to develop the Completely Fair Scheduler as a replacement for the earlier O(1) scheduler, crediting Kolivas in his announcement.[17] CFS is the first implementation of a fair queuing process scheduler widely used in a general-purpose operating system.[18]

The Completely Fair Scheduler (CFS) uses a well-studied, classic scheduling algorithm called fair queuing, originally invented for packet networks. Fair queuing had been previously applied to CPU scheduling under the name stride scheduling. The fair queuing CFS scheduler has a scheduling complexity of O(log N), where N is the number of tasks in the runqueue. Choosing a task can be done in constant time, but reinserting a task after it has run requires O(log N) operations, because the run queue is implemented as a red–black tree.

The Brain Fuck Scheduler, also created by Con Kolivas, is an alternative to the CFS.
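The pick-then-reinsert cycle can be illustrated with a toy run queue ordered by accumulated runtime. This sketch is not the kernel's code: it substitutes Python's binary heap (`heapq`) for the red–black tree, gives all tasks equal weight, and uses hypothetical names (`FairRunQueue`, `vruntime`, `run_next`). With a heap, looking at the smallest entry is constant time, while removing and reinserting it are logarithmic in the number of queued tasks.

```python
import heapq


class FairRunQueue:
    """Toy run queue ordered by accumulated virtual runtime (smallest first)."""

    def __init__(self):
        self._heap = []

    def enqueue(self, name, vruntime=0):
        heapq.heappush(self._heap, (vruntime, name))  # logarithmic insert

    def run_next(self, slice_length):
        """Run the task that has received the least CPU so far, then reinsert it."""
        vruntime, name = heapq.heappop(self._heap)
        self.enqueue(name, vruntime + slice_length)
        return name


rq = FairRunQueue()
for task in ("a", "b", "c"):
    rq.enqueue(task)
order = [rq.run_next(slice_length=10) for _ in range(6)]
print(order)
```

Because the task with the least accumulated runtime always runs next, three equal tasks end up sharing the CPU in strict rotation.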

FreeBSD

FreeBSD uses a multilevel feedback queue with priorities ranging from 0–255. 0–63 are reserved for interrupts, 64–127 for the top half of the kernel, 128–159 for real-time user threads, 160–223 for time-shared user threads, and 224–255 for idle user threads. Also, like Linux, it uses the active queue setup, but it also has an idle queue.[19]

NetBSD

NetBSD uses a multilevel feedback queue with priorities ranging from 0–223. 0–63 are reserved for time-shared threads (default, SCHED_OTHER policy), 64–95 for user threads which entered kernel space, 96–128 for kernel threads, 128–191 for user real-time threads (SCHED_FIFO and SCHED_RR policies), and 192–223 for software interrupts.

Solaris

Solaris uses a multilevel feedback queue with priorities ranging between 0 and 169. Priorities 0–59 are reserved for time-shared threads, 60–99 for system threads, 100–159 for real-time threads, and 160–169 for low priority interrupts. Unlike Linux,[19] when a process is done using its time quantum, it is given a new priority and put back in the queue. Solaris 9 introduced two new scheduling classes, namely the fixed priority class and the fair share class. The threads with fixed priority have the same priority range as that of the time-sharing class, but their priorities are not dynamically adjusted. The fair scheduling class uses CPU shares to prioritize threads for scheduling decisions. CPU shares indicate the entitlement to CPU resources. They are allocated to a set of processes, which are collectively known as a project.[7]

Summary

| Operating System | Preemption | Algorithm |
|---|---|---|
| Amiga OS | Yes | Prioritized round-robin scheduling |
| FreeBSD | Yes | Multilevel feedback queue |
| Linux kernel before 2.6.0 | Yes | Multilevel feedback queue |
| Linux kernel 2.6.0–2.6.23 | Yes | O(1) scheduler |
| Linux kernel after 2.6.23 | Yes | Completely Fair Scheduler |
| classic Mac OS pre-9 | None | Cooperative scheduler |
| Mac OS 9 | Some | Preemptive scheduler for MP tasks, and cooperative for processes and threads |
| macOS | Yes | Multilevel feedback queue |
| NetBSD | Yes | Multilevel feedback queue |
| Solaris | Yes | Multilevel feedback queue |
| Windows 3.1x | None | Cooperative scheduler |
| Windows 95, 98, Me | Half | Preemptive scheduler for 32-bit processes, and cooperative for 16-bit processes |
| Windows NT (including 2000, XP, Vista, 7, and Server) | Yes | Multilevel feedback queue |

See also

- Activity selection problem

- Aging (scheduling)

- Atropos scheduler

- Automated planning and scheduling

- Cyclic executive

- Dynamic priority scheduling

- Foreground-background

- Interruptible operating system

- Least slack time scheduling

- Lottery scheduling

- Priority inversion

- Process states

- Queuing Theory

- Rate-monotonic scheduling

- Resource-Task Network

- Scheduling (production processes)

- Stochastic scheduling

- Time-utility function

Notes [edit]

- ^ Liu, C. L.; Layland, James W. (January 1973). "Scheduling Algorithms for Multiprogramming in a Hard-Real-Time Environment". Journal of the ACM. ACM. 20 (1): 46–61. doi:10.1145/321738.321743. S2CID 207669821.

We define the response time of a request for a certain task to be the time span between the request and the end of the response to that request.

- ^ Kleinrock, Leonard (1976). Queueing Systems, Vol. 2: Computer Applications (1 ed.). Wiley-Interscience. p. 171. ISBN 047149111X.

For a customer requiring x sec of service, his response time will equal his service time x plus his waiting time.

- ^ Feitelson, Dror G. (2015). Workload Modeling for Computer Systems Performance Evaluation. Cambridge University Press. Section 8.4 (Page 422) in Version 1.03 of the freely available manuscript. ISBN 9781107078239. Retrieved 2015-10-17.

if we denote the time that a job waits in the queue by tw, and the time it actually runs by tr, then the response time is r = tw + tr.

- ^ Silberschatz, Abraham; Galvin, Peter Baer; Gagne, Greg (2012). Operating System Concepts (9 ed.). Wiley Publishing. p. 187. ISBN 978-0470128725.

In an interactive system, turnaround time may not be the best criterion. Often, a process can produce some output fairly early and can continue computing new results while previous results are being output to the user. Thus, another measure is the time from the submission of a request until the first response is produced. This measure, called response time, is the time it takes to start responding, not the time it takes to output the response.

- ^ Paul Krzyzanowski (2014-02-19). "Process Scheduling: Who gets to run next?". cs.rutgers.edu. Retrieved 2015-01-11.

- ^ Raphael Finkel. "An Operating Systems Vade Mecum". Prentice Hall. 1988. "chapter 2: Time Management". p. 27.

- ^ a b c Abraham Silberschatz, Peter Baer Galvin and Greg Gagne (2013). Operating System Concepts. Vol. 9. John Wiley & Sons, Inc. ISBN 978-1-118-06333-0.

- ^ Robert Kroeger (2004). "Admission Control for Independently-authored Realtime Applications". UWSpace. http://hdl.handle.net/10012/1170. Section "2.6 Admission Control". p. 33.

- ^ Guowang Miao; Jens Zander; Ki Won Sung; Ben Slimane (2016). Fundamentals of Mobile Data Networks. Cambridge University Press. ISBN 978-1107143210.

- ^ Early Windows at the Wayback Machine (archive index)

- ^ Sriram Krishnan. "A Tale of Two Schedulers Windows NT and Windows CE". Archived from the original on July 22, 2012.

- ^ "Windows Administration: Inside the Windows Vista Kernel: Part 1". Technet.microsoft.com. 2016-11-14. Retrieved 2016-12-09.

- ^ "Archived copy". blog.gabefrost.com. Archived from the original on 19 February 2008. Retrieved 15 January 2022.

- ^ a b "Technical Note TN2028: Threading Architectures". developer.apple.com. Retrieved 2019-01-15.

- ^ "Mach Scheduling and Thread Interfaces". developer.apple.com. Retrieved 2019-01-15.

- ^ [1] Archived 2011-08-11 at the Wayback Machine

- ^ Molnár, Ingo (2007-04-13). "[patch] Modular Scheduler Core and Completely Fair Scheduler [CFS]". linux-kernel (Mailing list).

- ^ Tong Li; Dan Baumberger; Scott Hahn. "Efficient and Scalable Multiprocessor Fair Scheduling Using Distributed Weighted Round-Robin" (PDF). Happyli.org. Retrieved 2016-12-09.

- ^ a b "Comparison of Solaris, Linux, and FreeBSD Kernels" (PDF). Archived from the original (PDF) on August 7, 2008.

References

- Błażewicz, Jacek; Ecker, K.H.; Pesch, E.; Schmidt, G.; Weglarz, J. (2001). Scheduling computer and manufacturing processes (2 ed.). Berlin [u.a.]: Springer. ISBN 3-540-41931-4.

- Stallings, William (2004). Operating Systems Internals and Design Principles (fourth ed.). Prentice Hall. ISBN 0-13-031999-6.

- Information on the Linux 2.6 O(1)-scheduler

Further reading

- Operating Systems: Three Easy Pieces by Remzi H. Arpaci-Dusseau and Andrea C. Arpaci-Dusseau. Arpaci-Dusseau Books, 2014. Relevant chapters: Scheduling: Introduction; Multi-level Feedback Queue; Proportional-share Scheduling; Multiprocessor Scheduling

- Brief discussion of Job Scheduling algorithms

- Understanding the Linux Kernel: Chapter 10 Process Scheduling

- Kerneltrap: Linux kernel scheduler articles

- AIX CPU monitoring and tuning

- Josh Aas' introduction to the Linux 2.6.8.1 CPU scheduler implementation

- Peter Brucker, Sigrid Knust. Complexity results for scheduling problems [2]

- TORSCHE Scheduling Toolbox for Matlab is a toolbox of scheduling and graph algorithms.

- A survey on cellular networks packet scheduling

- Large-scale cluster management at Google with Borg

Source: https://en.wikipedia.org/wiki/Scheduling_(computing)

Posted by: readybunpremong.blogspot.com
